QSqlTableModel Network Performance

-

Very good idea !

-

All,

I'm in the process of converting my desktop application from a local SQL server to a cloud based SQL server in Azure and having some performance issues with the switch.

On the local server during testing there are no performance issues. After moving the database to the cloud though I'm noticing a few issues with just the test data (5 tables, each with around 15-25 columns and 30 records in each).

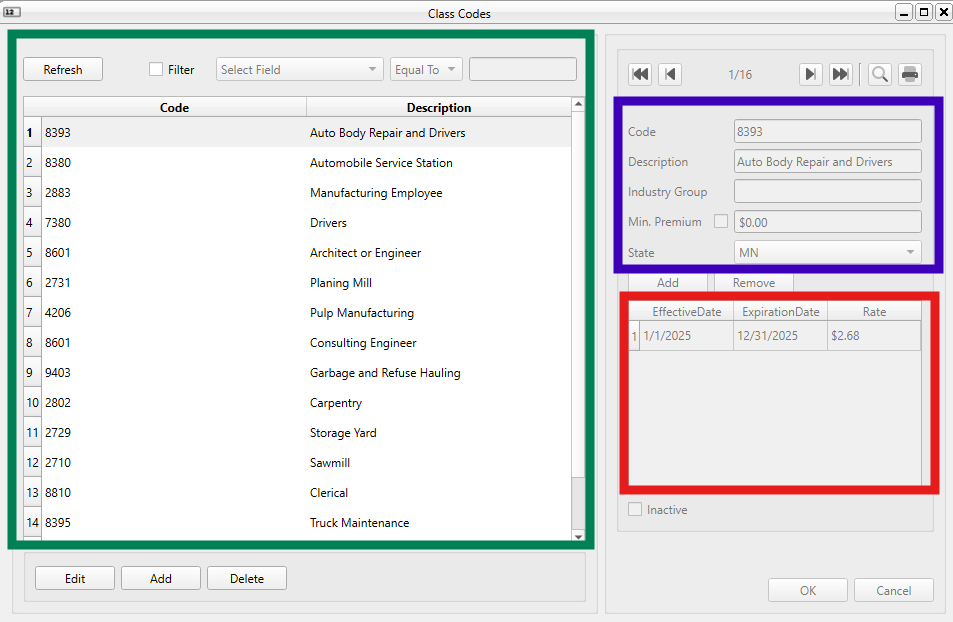

Each table is accessed via a custom widget in a QMdiSubWindow. The custom widgets are primarily composed of:

- QSqlTableModel with an OnManualSubmit edit strategy

- QTableView (Green)

- QDataWidgetMapper (Blue)

- In certain cases, a secondary QTableView lookup based on the primary selection (Red)

From researching similar posts such as this one it appears that my issue is related to the QTableView connections and volume of data() calls on the "live" model when gaining/losing focus, resizing, moving, etc.

My questions are:

-

Is this an application design issue? i.e. should the database not be accessed in the cloud? If so, what approaches have others used for database access from multiple locations?

-

If cloud access is fine (albeit with some additional security concerns) how have others implemented a cached model to get around these performance issues as suggested by @SGaist and @MrShawn in the linked post above?

- My first thought here was to create a QStandardItemModel class that gets populated on initial load via a QSqlQuery with setForwardOnly set to true. Keep track of changes (UPDATE, INSERT, DELETE, etc.) in a cache and batch the uploads to the cloud. Is this on track?

My connection is 150 mpbs down/50 mpbs up and I'm running 6.8.1 on Qt Creator 15.0.0.

Thanks,

Zach@ZNohre said in QSqlTableModel Network Performance:

My connection is 150 mpbs down/50 mpbs up

More important than throughput are the latency numbers - what is the average RTT (round trip time) and many network hops does the application client need to go through to reach the database server.

Database protocols can be quite chatty, with lots of round-trips, so even a high bandwidth connection but with high latency will suffer greatly. The strategy in such case is to prefectch as much data as possible - e.g de-normalizing in the database by joining all related data in one big query and then splitting it back up in the client.

-

@SGaist will do, and agreed re: limiting the amount of times that the data is refreshed in the view. I started down that path initially, but figured as long as I'm going down that path I might as well try to resolve the root issue.

@ZNohre

To diagnose what is going on when, when I did my database work I always found it invaluable to be able to see (debug out) each query executed every time. I cannot remember whether/how I did this with Qt QSQL... calls. Maybe there wereprotectedmethods I could override, maybe there is some "trace" flag you can set. If an expert can recall what is available that would be helpful?UPDATE

Being unable to spot it myself in docs or by Googling, I tried ChatGPT. It said it was having a long think about this :) and thenShort answer: Qt does not provide a single built-in global switch that prints every SQL statement issued by all QSql* classes.

Hmph! Why in the world not? They really ought to offer some such from their code, wherever they send something the SQL db, it really wouldn't be that difficult for them....

-

@ZNohre

To diagnose what is going on when, when I did my database work I always found it invaluable to be able to see (debug out) each query executed every time. I cannot remember whether/how I did this with Qt QSQL... calls. Maybe there wereprotectedmethods I could override, maybe there is some "trace" flag you can set. If an expert can recall what is available that would be helpful?UPDATE

Being unable to spot it myself in docs or by Googling, I tried ChatGPT. It said it was having a long think about this :) and thenShort answer: Qt does not provide a single built-in global switch that prints every SQL statement issued by all QSql* classes.

Hmph! Why in the world not? They really ought to offer some such from their code, wherever they send something the SQL db, it really wouldn't be that difficult for them....

-

@JonB Two steps (more or less):

- Build the SQL module with QT_DEBUG_SQL defined

- Ensure the

qt.sql.qsqlquerylogging category is enabled

Go to the source ;-)

@SGaist said in QSqlTableModel Network Performance:

Ensure the qt.sql.qsqlquery logging category is enabled

That sounds like it would be a good idea! So how come ChatGPT doesn't know about it?

Edit: Oh, you mean these are not alternatives, both are required? So that requires building from source code, some of us do not do that. May be relevant to OP though.

-

@SGaist said in QSqlTableModel Network Performance:

Ensure the qt.sql.qsqlquery logging category is enabled

That sounds like it would be a good idea! So how come ChatGPT doesn't know about it?

Edit: Oh, you mean these are not alternatives, both are required? So that requires building from source code, some of us do not do that. May be relevant to OP though.

@JonB Because ChatGPT does not know everything. These kind of information are just so statically insignificant that it won't mathematically appear when it tries to find the next token to answer your question.

Unless you feed it the Qt sources and actively tell it to find such things in there, there's a very low chance that it will find it as you have experienced.

-

@ZNohre

To diagnose what is going on when, when I did my database work I always found it invaluable to be able to see (debug out) each query executed every time. I cannot remember whether/how I did this with Qt QSQL... calls. Maybe there wereprotectedmethods I could override, maybe there is some "trace" flag you can set. If an expert can recall what is available that would be helpful?UPDATE

Being unable to spot it myself in docs or by Googling, I tried ChatGPT. It said it was having a long think about this :) and thenShort answer: Qt does not provide a single built-in global switch that prints every SQL statement issued by all QSql* classes.

Hmph! Why in the world not? They really ought to offer some such from their code, wherever they send something the SQL db, it really wouldn't be that difficult for them....

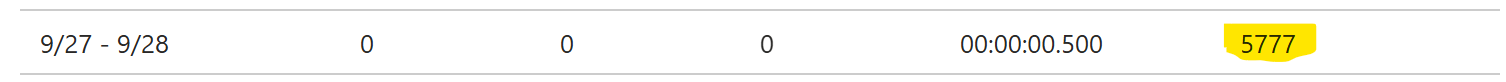

@JonB I checked this out on the server side during the initial posting and found that one of my widgets had called its SELECT query 5,777 times(!) in maybe 2 hours of testing. I'll have to look into @SGaist's solution below re: the QT_DEBUG_SQL defined.

My cloud hosting bill may be a bit higher this month :)

-

@ZNohre said in QSqlTableModel Network Performance:

My connection is 150 mpbs down/50 mpbs up

More important than throughput are the latency numbers - what is the average RTT (round trip time) and many network hops does the application client need to go through to reach the database server.

Database protocols can be quite chatty, with lots of round-trips, so even a high bandwidth connection but with high latency will suffer greatly. The strategy in such case is to prefectch as much data as possible - e.g de-normalizing in the database by joining all related data in one big query and then splitting it back up in the client.

-

@IgKh Thank you, I still have a lot to learn about networking and databases so I wasn't aware of this. Is there a preferred tool/method to check these metrics (RTT and network hops)?

For RTT you can use

ping, but it sometimes blocked or not accurate. A good way is to run a query that doesn't need any data (such asSELECT 1) several times and measure the average execution times. Anything over 1-5 milliseconds is to be considered high latency.For network hops use the

traceroutecommand, or its equivalent on your platform. -

Full tableview models over an internet are bad ju-ju. You should switch to a client/server transaction model. It's ok to load managable chunks into a local table presentation, but you MUST limit the number of returned rows and use indexes properly. Never blindly load a table. Doing that means you are not using the relational database properly. Only time you should ever need full table access is during maintenance as a DBA, but even then it's not strictly necessary.

trying to predict and manage latency won't solve your problems. When you switch to a networked database model your access mechanisms have to allow for unpredictable latency.

Some hints

- make sure your SQL is being executed as stored procedures on the database server where the data is hosted, not the local machine..."cloud" should make you nervous if the table data isn't stored on the same machine as the DB server.

- switch to a rigid transaction model rather than a table view model

- make effective use of proper indexing and limiting of SQL result sets

Sorry to be the voice of gloom, but local DB and network are two different worlds.